Addy.ai

The wisdom of the world at your fingertips…

Company: TenPoint7

Timeline:

Originally a 3-month contract, extended to full-time employment as the project became ongoing

Overview:

Redesigning an internal Natural Language Processing (NLP) tool as a SaaS product for self-service use

Background

Addy began as an internal tool for TenPoint7 analysts, used to create Latent Dirichlet Allocation (LDA) topic models. The analyst would add documents to a corpus, either by uploading files, adding specific URLs, or with a web search. Addy would then use Natural Language Processing (NLP) to read the hundreds or thousands of documents, organize them into topics, perform sentiment analysis, identify top words and entities, and create data visualizations. The analysts would then dig into the subject, easily finding insights which would then be presented to clients.

As the tool improved, the company decided to turn it into a self-service product. The development team in Vietnam created a GUI and Addy was released as a beta product. However, the beta program struggled to gain traction, pushing the company to look for a UX designer. This is where I come in…

My Role

For the first couple of months of the project I worked alongside my mentor, Carrie Kim. She worked on Addy’s branding and the product’s marketing site, while I owned the application redesign.

After Carrie left the project, I became the sole designer for Addy.

Approach

Carrie and I worked together on a project proposal. We took an agile design approach, beginning with research, then ideation, sketching, wireframing, and prototyping, testing the designs, and discussing with stakeholders - iterating throughout the process.

Target Market

Beginning as an internal tool, Addy had been designed for analysts. While it proved its value to our team, we didn’t yet know who exactly it might resonate with in the market, so the sales team wanted to cast a wide net. Because the target was uncertain, and changed a few times over the course of the redesign, I created more general personas focused on the type of work being done rather than specific job titles. While they lacked helpful specifics, they were flexible enough to remain relevant throughout, even with moving targets.

Research Strategy

Addy is a big and complex product, so the first step was to familiarize myself with it. There was a fair bit of data already collected to review, including surveys and feedback from beta users, feedback and ideas from the TenPoint7 analysts it was originally created for, and a recorded focus group. The company also had 2 more focus groups already organized, so I sat in on those and took notes. Additionally, I planned to do several contextual inquiries, user interviews, and stakeholder interviews.

Product Audit

The product audit served two purposes: it gave me a chance to dig into the product and familiarize myself with all of Addy, and it served as a reference for the rest of the project. I took screenshots of every screen in Addy, creating a digital inventory as well as a printed version on the wall. Both denote hover and click interactions, demonstrate the information architecture and user flow, and allow one to look at all interactions on a page at one time.

One section of the inventory

Beta Program Feedback

Before I started, the team had collected feedback from our beta users. Reading through the comments and questions of beta users, several themes emerged. While people were excited about Addy, they had trouble getting started and learning how to use the tool to get the insights they were looking for, with many users saying they struggled to understand what to do when they started.

It also seems like a lot of the problems people wrote about stem from having built a poor corpus. One user wrote, “It became obvious right away that the quality of the data source determines the quality of the insights. What is the "optimal" amount of content?” I think this contributes to negative comments, such as topic labels being too general or visualizations not being useful.

Takeaways

Current onboarding and instruction methods are insufficient for most users to understand the tool without additional and significant time and effort.

Making mistakes or poor choices in model building triggers further issues and frustrations when interpreting results.

Focus Groups

The business team planned to hold focus groups with UW students, with marketers, and with analysts. Before I started, work had begun on a new feature similar to a text editor (called “workbench”) with the ability to insert excerpts from the topic model (“clips” or “insights”). The focus groups were meant to find out how people felt about these new features and their names, and also to gauge the effectiveness of messaging on social media and the website.

Takeaways by group:

Contextual Inquiries and User Interviews

I did contextual inquiries with 3 people who had never used Addy before, and 2 interviews + observation sessions with experienced internal users. The sessions with new users were incredibly valuable. They gave a lot of feedback. To organize it, I logged each comment in an Airtable spreadsheet (embedded below) with tags for the type of comment (question, suggestion, frustration, etc.), the section of Addy it pertains to, and the severity.

Then I added a color-coded dot sticker for each comment to my printed inventory, labeling each sticker with the corresponding number from the spreadsheet. Being able to actually see where people were having issues, and where those issues were concentrated, was hugely helpful in understanding things at a more macro level, and later when I was working on the new design.

Research Synthesis

Addy is an extremely versatile tool; however, this makes it difficult for a beginner to learn. While users liked Addy and were excited about its possibilities, there was a lot of confusion and difficulty in actually using it. There’s no one problem making Addy hard to use, but a combination of many factors leading to uncertainty in what to do and why. So, rather than name all of the specific issues, I thought it would be best to organize areas for improvement into overarching design themes.

Instruction, because users struggled to understand what to do.

Customization, because we have many types of users with different needs.

Simplicity, because again and again users said it was too complex.

Clarity, because even when users were doing the correct things, they were uncertain.

Context, because it wasn’t clear to users how different pieces worked together.

Collaboration, because it was something that was requested, and part of the long term vision for the product.

A poster I hung in the office

Problems to Solve

1. Users don’t know what to do or how to get started

2. Users don’t want to do the work of writing a boolean query (which can be key to getting a good result)

3. Users struggle to complete the model building process

4. Users can’t tell that the filters on “Summary” are filters

***

User Flow

One of the first things I did was look at the user flow and information architecture of the app. In the contextual inquiries, it was difficult to measure the effectiveness of the workflow because each individual step was often a struggle. Because the current workflow worked well for our internal users and it was difficult to test with new users, I decided to leave it more or less as-is in the short term, planning to assess it again after the release of the initial redesign.

(View larger version https://overflow.io/s/2D2UAL)

Ideation

Build Wizard

Before users can really dive into Addy, they have to build a topic model. In the original version, there was a page to name the model, a page for web searches, and a single page for direct URLs, APIs, file uploads, review of corpus, and date selection. People had difficulty with the web search, but this last page was the bigger issue. There was a lot going on, and just seeing everything they had to figure out overwhelmed users.

I decided to separate each step out to its own page, and then added a step at the beginning for users to select which data input methods they’d like to use. That way, they only have to see the steps that are relevant to them.

Advanced Search - Auto-boolean

Through my discussions with the analysts, it seemed like the consumer users were more likely to use the web search like they would Google, searching for phrases like “world war 2”, while enterprise users would do advanced searches to exclude words, search specific words, or specify websites to be included in the search.

With “simplicity” in mind, I decided to make the text inputs expandable, to decrease the cognitive load for users not interested in adding these additional filters to their search. When expanded, there are text inputs for including and excluding words/phrases, and an input to search within specific domains. The user would type what they want to include or exclude, click “add” and that input would be added to the query with the proper boolean formatting.
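To make the auto-boolean idea concrete, here is a minimal sketch of how the inputs might be assembled into a query string. The function names, the `AND` / `AND NOT` operators, and the `site:` prefix are all assumptions for illustration; the actual query syntax Addy's search backend expected is not described here.

```python
from typing import Iterable


def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are matched as a unit."""
    term = term.strip()
    return f'"{term}"' if " " in term else term


def build_query(
    base: str,
    include: Iterable[str] = (),
    exclude: Iterable[str] = (),
    domains: Iterable[str] = (),
) -> str:
    """Combine the plain search phrase with the optional advanced inputs.

    Hypothetical syntax: AND for included terms, AND NOT for excluded
    terms, and site: for domain restrictions.
    """
    parts = [quote(base)]
    parts += [f"AND {quote(t)}" for t in include if t.strip()]
    parts += [f"AND NOT {quote(t)}" for t in exclude if t.strip()]

    sites = [f"site:{d.strip()}" for d in domains if d.strip()]
    if len(sites) == 1:
        parts.append(f"AND {sites[0]}")
    elif sites:
        # Several domains are alternatives, so group them with OR.
        parts.append("AND (" + " OR ".join(sites) + ")")

    return " ".join(parts)
```

A user who leaves the advanced inputs collapsed would simply get their phrase back (quoted if it has more than one word), which matches the goal of keeping the simple case simple.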

Overview Page

Many users were using the filters on the summary page as visualizations or summarizing lists to get an overview of the model, not realizing that they are clickable filters. I decided to create a page specifically for this type of high level summary, calling it “overview”. Because the Summary page was no longer a summarizing page, the team met to brainstorm alternatives, settling on “Discover”.

I spent a lot of time considering what to include on the overview dashboard. I made roughly proportional cutouts of the charts and lists I was considering, and moved them around taking pictures of potential layouts. I chose several that I liked best, and made wireframes to show in the stakeholder design review for feedback.

Discover

With Overview being more of a summary, we changed the “summary” tab to “discover”. The main issue on this page was that people didn’t know the filters were filters. To address this, I chose to use progressive disclosure to get users used to their selection affecting what is displayed. I also made a column for the filters and titled it “filters”, which helped. First the user would choose a topic, then the filters panel becomes visible along with the document results.

Before selecting a topic

After selecting a topic

Help Panels

Arguably THE biggest problem for users is not knowing what to do, or what something does. To address this, I added an omnipresent slide out help panel. This is available on every screen, and there are also question mark icons next to things that the user might want to know more about. When they click a question mark, the help panel slides out, highlighting the corresponding text.

D3 Visualizations

Addy had many data visualizations, most of which were included just because they were available assets. Some, like the termite plot, users found interesting in testing, but not useful or informative. So, aiming to simplify, I wanted to remove unnecessary visualizations. I started by making a list of all of them, and sorting through which were useful to each proto-persona and eliminating charts not useful to either.

We used D3 for all of the visualizations in the app, so I also looked through to see if I wanted to include any new ones. I decided to add the Sunburst chart for the Overview page because it could combine several tiers of information from the Discover page. The first level was for the topics, the second level was each topic’s top words, and the third level was each top word’s similar words.
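The three tiers map naturally onto the nested `name` / `children` structure that D3's hierarchy layouts commonly consume. The sketch below is only an illustration of that shape: the input format, the `"model"` root label, and giving every leaf an equal `value` of 1 are assumptions, not a description of how Addy's data was actually prepared.

```python
import json
from typing import Dict, List


def build_sunburst(topics: Dict[str, Dict[str, List[str]]]) -> dict:
    """Nest topics -> top words -> similar words for a sunburst chart.

    `topics` maps a topic label to {top word: [similar words]}.
    Each leaf gets value 1 so every similar word is weighted equally
    (an assumption; real weights could come from the model).
    """
    return {
        "name": "model",  # hypothetical root label
        "children": [
            {
                "name": topic,
                "children": [
                    {
                        "name": word,
                        "children": [{"name": s, "value": 1} for s in similar],
                    }
                    for word, similar in words.items()
                ],
            }
            for topic, words in topics.items()
        ],
    }


# Placeholder data, purely illustrative.
example = build_sunburst({"Topic A": {"word1": ["similar1", "similar2"]}})
print(json.dumps(example, indent=2))
```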

The colors on the charts needed some changes too. In the original version, to indicate selection, the bar on a bar chart would get a bit lighter. Users weren’t noticing this, and since there could be up to 15 topics shown, there were dark and light versions of most colors already in use. Based on “Practical Rules for Using Color in Charts” by Stephen Few, I started by making a number of charts one color (with another color indicating selection), instead of multicolor, since the colors were not communicating anything to viewers. However, there were still some cases where it was important to show up to 15 different colors, such as the segmented bar charts. Since chart colors should be hues as distant from one another as possible, this palette was a bit difficult. Ultimately, for categorical charts, I used I Want Hue, a tool that uses k-means and force vector algorithms to create “optimally distinct” color palettes of any size. For sequential/quantitative charts, I used the well-known ColorBrewer tool.
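As a rough illustration of "hues as distant from one another as possible", the sketch below spaces hues evenly around the color wheel. This is a deliberately simpler stand-in and does not reproduce I Want Hue's clustering approach; the function name and the default lightness and saturation values are assumptions.

```python
import colorsys
from typing import List


def distinct_hues(n: int, lightness: float = 0.5, saturation: float = 0.65) -> List[str]:
    """Return n hex colors whose hues are evenly spaced around the wheel."""
    colors = []
    for i in range(n):
        # colorsys takes hue, lightness, saturation, each in the range 0..1.
        r, g, b = colorsys.hls_to_rgb(i / n, lightness, saturation)
        colors.append(
            "#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255))
        )
    return colors


palette = distinct_hues(15)  # one color per topic, up to 15 topics
```

Even spacing in HLS ignores how differently the eye perceives some hue steps, which is part of why a dedicated palette tool was the better choice for the real charts.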

Top Topics bar chart before (left) and after (right). Both have the first topic selected.

After consulting with the lead data scientist, percentages were removed in the new version because they weren’t explained or easily explainable to users, and were often misinterpreted.

Color Palettes for Visualizations

Wireframes

After working through my initial ideas, I made lower fidelity wireframes to later present to stakeholders.

(A small sample of the wireframes)

Stakeholder Design Review

I presented these initial wireframes to the stakeholders, asking for feedback. Many sections had multiple versions, solving the problems in different ways. Addy is a big application, so to help everyone remember their feedback, I printed out note taking packets that follow the presentation with spots to rate designs and jot notes.

Validation Testing

After getting feedback from the team, I increased the fidelity on the wireframes that would move forward, made some iterations based on feedback, and uploaded them to InVision for testing. (prototype available here)

I tested the prototype with 4 people. The test went well, and I collected some good qualitative feedback, logging suggestions on Confluence.

When asked to rate the tool’s ease of use, the average score was 8.5/10.

Final Mockups

After the successful validation tests, I further increased the fidelity, making a few small tweaks, producing hi-fi mockups to pass off to the development team.

(A small sample of the mockups)

Handoff

With the designs ready for development, I headed off to Ho Chi Minh City, Vietnam to meet with the dev team in person, walk them through the design, and kick off development.

Over the course of 2 afternoons, I presented the new design screen by screen, going over interactions and explaining some of my reasoning. The developers asked questions as we went, and where they identified concerns, we talked through any potential problems and how we might solve them.

By the end, everyone felt heard and we had a planned course of action. I would make some final tweaks to the design based on some of their feedback, and they would begin development in the next sprint.

Team Lunch in HCMC

Since we work in different time zones, most of our communication would be written. I decided to use Zeplin for the specs because placing comments directly on the mockups would make it easier to discuss a specific element without as much back-and-forth explaining what the question was about.

With many people using Zeplin, and a lot of discussion going on there, I decided to color code the comments as follows:

Purple - Details

Blue - Interactions

Yellow - Question, low urgency

Orange - Question, medium urgency

Red - Question, high urgency

On pages with a lot of comments, this made it a lot easier to understand what was going on at a glance.

Product Launch

Addy officially launched in January 2020.

The original beta program had somewhere around 100 participants. They all needed one-on-one support, with only a couple continuing to build their own models after multiple sessions with analysts.

Between January and March 2020, over 100 users signed up for a free trial, with 82 of them building topic models unassisted.

Promo video for the student campaign

User behavior

During this time, I used Hotjar to understand how real users were using Addy. Our signups were high, but so was our churn rate. Looking at the session recordings, the problem was clear - almost all of the users were on mobile devices. We had planned to eventually create a mobile version of Addy for limited tasks, but it had been a low priority - analysts and students did their research on computers, right? It had previously seemed that way based on those we talked to and met with, but the data said otherwise.

Again and again, I watched people sign up and sign in on mobile phones, only to tap around, see that the UI was definitely not for mobile, and then leave, rarely returning on a computer. So, I started working on a plan to address this.

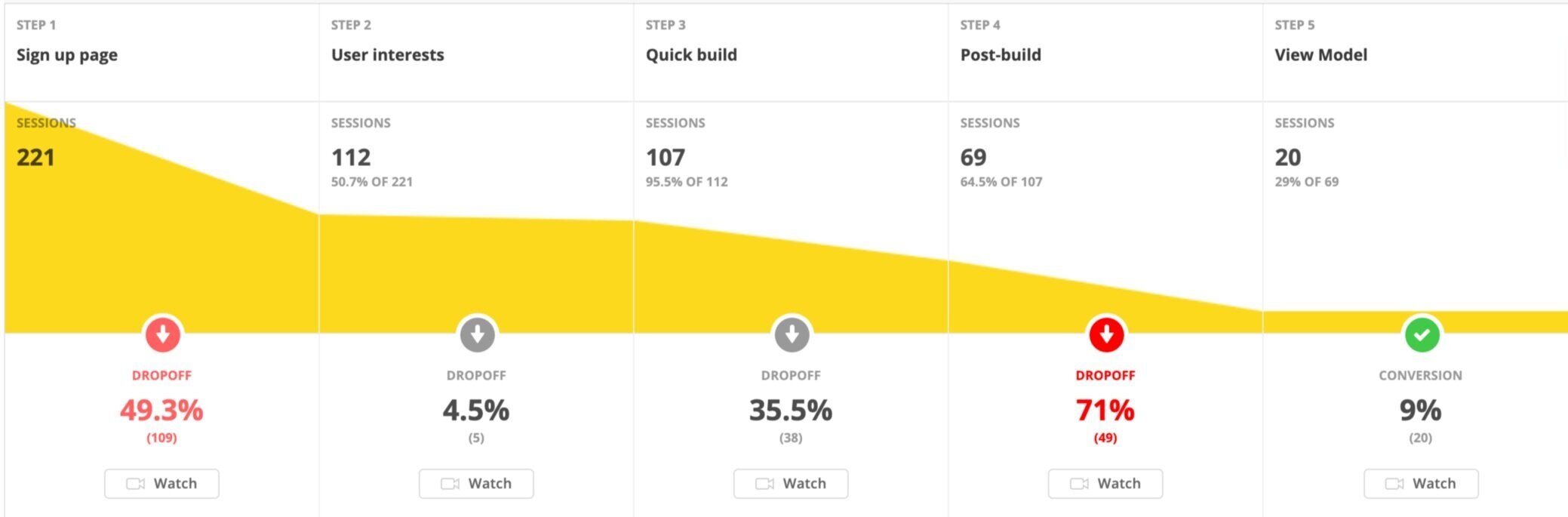

A funnel tracking users from sign up to viewing results of their model

Addy Mobile

To quickly address this issue, I decided to design mobile versions of just the first screens users would use - Sign up, log in, onboarding, quick build, and build status. After they started their model running, a popup would tell them that they could view their finished model on the full desktop version of the app.

In the MVP, users would not be able to view projects or models on mobile. These screens would be very complex to convert to mobile, but we would consider it later. For now, we wanted to get users going, and then let them know that Addy is intended for desktop use.

Addy’s Untimely End

In March 2020, the company lost funding amid the COVID-19 crisis, ultimately shutting down by the end of the month.

Earlier that week, we had started development of the mobile update.

Retrospective

I loved working on Addy, and learned a lot in the process. I still believe in our mission to make NLP accessible to anyone curious, and I really enjoyed working on something that required me to learn about and understand aspects of machine learning.

Working with an offshore team

I had great access to managers, data scientists, and the business team, but I would have liked to have had a closer working relationship with the offshore dev team. If I did it over again, I would try harder to make that happen. During the development of the redesign, I started a Slack channel called Dev&Design to share early sketches of new features and get some early feedback, but it would have been most useful months earlier when I was working on the big overhaul. More frequent video meetings would also have helped in building a stronger team connection.

Mixed Target Audience

A big part of Addy’s product vision was to be “accessible to anyone curious”. The challenge was to design something simple enough for a broad consumer group, but powerful enough for enterprise use. In a way, those analysts are consumers too, and would likely appreciate a simple and easy to use product, but only if it still aided them in their more complex analyses.

Ultimately, I tried to use progressive disclosure to hide the more advanced functions from less technical users while keeping them available to those interested. I had planned to add more customization in future iterations to better meet the needs of different groups, after launching the product and seeing who actually used it. Unfortunately, we never got that far.

I don’t know if more market research would have led to neater, detailed personas and a better targeted product, but I do think it would have at least led to valuable knowledge. Going forward, this is something I’d push harder for.

Designing for AI/ML

One of the most interesting parts about Addy was learning how it worked. As someone really interested in data science, I loved learning about the NLP that created Addy’s topic models. This understanding was important as a UX designer - it allowed me to consider the full range of the user’s experience. What if we added a hierarchical clustering algorithm to the pipeline in order to use dendrograms to let users select the number of topics in their model? Could we use semi-supervised learning to allow users to define the topics they expect, then let Addy fill in the rest?

Considering these and other possibilities was exciting and deepened my appreciation for how powerful these technologies can be for data scientists, but also for consumers. I think of AI today as being like early computers - totally game changing, but nearly only accessible to those who can code (and have studied math or engineering, in the case of AI). But I believe we can, and will, continue to give it friendlier and friendlier interfaces, increasing accessibility so that anyone, regardless of technical ability, can use this incredibly capable technology themselves.

What was next…

We’d been making plans for future iterations up until the very end. Some of those plans were:

Seeing how user behavior changed after launching the mobile update. Then looking for other problems contributing to churn.

On March 17 I launched a Hotjar Poll to collect data on how users wanted to use Addy. I hoped to use the results to determine which new features we should start with, and what in the existing design might not support the goals of our real users and needed more thought.

I’d recently started working on a more comprehensive onboarding process with a guided tutorial that users could toggle on and off as needed.

Adding powerful collaboration abilities.

Adding the ability to create supervised learning models in addition to the current unsupervised LDA method.

Building a community for people to create and share their models.

Working with developers to ensure all UI elements are standardized, and creating a robust and scalable design system.